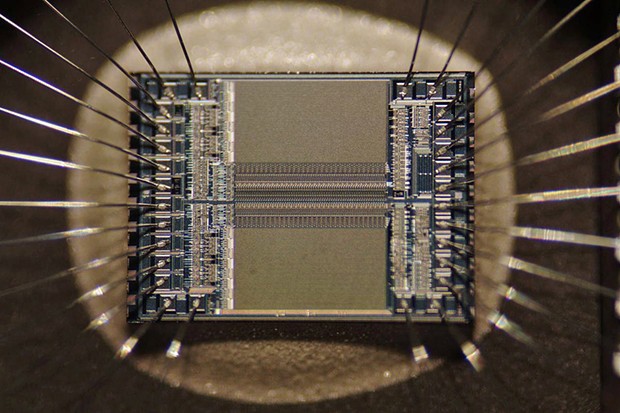

Image by Zephyris, via Creative Commons

EPROM microchip die showing detail of an integrated circuit. Researchers doubt that IC chips can be manufactured beyond the 3-nanometer scale.

Last time, I discussed the possibility that pure science has reached some fundamental limits ("Is Science Done For? Part 1: Pure Science," Sept. 26). This "end of science" idea was popularized (and employed as a title) by science writer John Horgan in his 1996 book. As I noted, Horgan claimed, "the era of truly profound scientific revelations about the universe and our place in it is over." He considered a broad range of disciplines that collectively come under the rubric "pure science," including physics, cosmology, evolutionary biology, social science, philosophy, neuroscience, chaos/complexity theory and machine science. Taking them one by one, he suggested that all the big epiphanies had already been made. Other than the discovery of "dark energy" in 1998, he nailed it. Nothing comparable to, for instance, quantum mechanics, relativity or DNA, has surfaced for decades.

What about applied science, that is, technology? Has that advanced dramatically since 1996? Mostly, no, with the singular exception of digital technology. The fact that I'm in a coffee shop writing this column on a palm-size iPhone with a wireless keyboard, researching in real time using Google, Wikipedia and the like, speaks to the success of digital/information technology. (Remember typewriters and libraries?)

Note, however, that "pure science" foundations leading to computer technology were established long ago by such worthies as Charles Babbage and Ada Lovelace in the 19th century, and Alan Turing and John von Neumann in the 20th. For all the gee-whiz features of IT today, the basic technology was already in place 60 years ago. As evidence, the computer that successfully guided Apollo astronauts to and from the moon was designed and built in the mid-1960s.

As one indication that technology is coming up against fundamental limits, take Moore's "Law," Gordon Moore's 1965 speculation (revised in 1975) that the number of transistors in an integrated circuit doubles every two years. So far, so good, except that (1) the number of researchers required today to achieve this doubling is nearly 20 times larger than the number required in the early 1970s, and (2) chip designers are approaching quantum limits in downsizing beyond the "3 nanometer" scale. (Viable "quantum computers" are still many years away.)
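For a sense of what a two-year doubling adds up to, here is a minimal back-of-the-envelope sketch. The function name and the 40-year span are my own illustrative choices, not figures from Moore or from this column.

```python
def doubling_factor(years: float, doubling_period: float = 2.0) -> float:
    """Growth factor after `years` for a quantity that doubles
    every `doubling_period` years (Moore's revised 1975 pace is 2)."""
    return 2.0 ** (years / doubling_period)


# Illustrative assumption: 40 years of uninterrupted doubling.
# 40 / 2 = 20 doublings, i.e. 2**20.
factor = doubling_factor(40)
print(f"{factor:,.0f}x")  # prints 1,048,576x
```

Twenty doublings is roughly a millionfold increase, which is why sustaining the pace keeps getting harder.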

Let's look at some of what, to my mind, are increasingly frantic attempts to prove Horgan wrong in other realms of technology:

Cancer treatment. Far from winning the "war," in terms of overall deaths we're no better off now than when the first edition of The End of Science came out, and we're certainly over-prescribing and over-diagnosing (see "Cancer, Part 1: The Unwinnable War," Jan. 17). Ditto psychology.

Prescription drugs. The number of new drugs approved per billion U.S. dollars spent on R&D has halved roughly every nine years since 1950.

CRISPR and gene therapy. Still awaiting real-world payoffs.

Electric cars. Invented in the 1880s; better motors and batteries now, thanks to new materials and manufacturing techniques, but no revolutionary breakthroughs in understanding. (See "James Dyson's Electric Car," Feb. 7.)

Higgs boson. Finding the Higgs in 2012 was a huge technological achievement for CERN at the world's largest particle accelerator. However, the real breakthrough in our fundamental understanding of nature would have been not finding it. Predicted in the 1960s, its discovery confirmed the 52-year-old Weinberg-Salam Standard Model of Particle Physics (see "God Particle or Goddamn Particle?" Nov. 7, 2013).

Gravitational waves. Much as I love them and the new window they open on the universe, they were predicted in 1916. Detection just had to wait for the technology to catch up (see "Gravity Waves: Confirming a Metaphor," Feb. 25, 2016).
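To put the prescription-drug figure above in perspective, the same kind of arithmetic can be run in reverse. This is only a rough sketch: the function name and the 63-year span (seven nine-year halvings, counting from 1950) are illustrative assumptions of mine.

```python
def halving_fraction(years: float, halving_period: float = 9.0) -> float:
    """Fraction remaining after `years` for a quantity that halves
    every `halving_period` years."""
    return 0.5 ** (years / halving_period)


# Illustrative assumption: 63 years after 1950, i.e. 7 halvings.
fraction = halving_fraction(63)
print(f"1/{1 / fraction:.0f} of the starting rate")  # prints 1/128 of the starting rate
```

In other words, if the trend held steadily, a billion R&D dollars would buy less than 1 percent of the new drugs it did at the start.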

So what would I consider to be truly groundbreaking advances in applied science? That's easy: All I have to do is check virtually any issue of Popular Science from 60 or 70 years ago: fusion power (still 25 years away!), jetpacks and flying cars, sentient robots, vertical urban farms, synthetic meat, moon colonies, anti-aging pills, really efficient batteries. Teleportation would be pretty cool, too.

Barry Evans ([email protected]) prefers he/him pronouns and is waiting for a gizmo to floss his teeth while he sleeps.
